Test

The Test functionality requires a subscription to Automation.

The Test action on the Execution ribbon lets you test your decision logic within the Decision Modeler environment by executing a selected decision service.

If the Test action is grayed out, your model is most likely not executable, for example because it does not define any decision logic.

Test Mode

Clicking the Test action on the Execution ribbon puts your model in Test Mode. In this mode, the model becomes read-only, the test controls appear in a left-side panel, and a test bar appears at the top.

Defining Test Parameters

In Test Mode, you select the test parameters in a left-side panel:

Service

What you are testing is always a particular decision service, even if you did not explicitly create one. For testing, Decision Modeler automatically creates several:

- Whole Model Decision Service: Inputs are all input data, outputs are all decisions with no outgoing information requirements; all other decisions are encapsulated.

- Diagram [Page Name], one per DRD page: Inputs and outputs same as Whole Model Decision Service.

- [Decision name], one per decision: Inputs are information requirements of the decision, output is the decision output.

- Whole model: Inputs are all input data, outputs are all decisions in the model.

The default service selected depends on the current page of your model when you go into Test Mode, but you can select any of the above-named services from the Service dropdown.
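As a rough illustration of how the inputs and outputs of these generated services follow from the model's requirements graph, here is a minimal Python sketch. The `Model` class and the function names are hypothetical and are not part of Decision Modeler; the model is reduced to a set of input data names and a map from each decision to the items it requires.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass
class Model:
    """Hypothetical, simplified view of a decision model."""
    input_data: Set[str]
    # decision name -> names of the input data and decisions it requires
    requirements: Dict[str, Set[str]]


def whole_model_decision_service(model: Model) -> Dict[str, Set[str]]:
    """Outputs are the decisions that no other decision requires."""
    decisions = set(model.requirements)
    required_by_others = set().union(*model.requirements.values())
    outputs = {d for d in decisions if d not in required_by_others}
    return {
        "inputs": set(model.input_data),
        "outputs": outputs,
        "encapsulated": decisions - outputs,
    }


def single_decision_service(model: Model, decision: str) -> Dict[str, Set[str]]:
    """Inputs are the decision's information requirements."""
    return {"inputs": set(model.requirements[decision]), "outputs": {decision}}


def whole_model(model: Model) -> Dict[str, Set[str]]:
    """Every decision in the model is an output."""
    return {"inputs": set(model.input_data), "outputs": set(model.requirements)}


# Illustrative model: Approval requires Risk, which requires Applicant.
example = Model(
    input_data={"Applicant"},
    requirements={"Risk": {"Applicant"}, "Approval": {"Risk", "Applicant"}},
)
```

In this example, the Whole Model Decision Service exposes only `Approval` and encapsulates `Risk`, while the service generated for `Approval` alone takes `Risk` and `Applicant` as inputs.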

In

Below the Service dropdown are 5 tabs. The In tab specifies the input values for your selected decision service. There are four ways to supply input values, selected by the icons:

| Mode | Description |
|---|---|
| HTML | A web form where you type the inputs manually. See the Form section below for more information on the different widgets that compose the form. |
| File | A file picker where you select or drop a file to use as input for the service. The service accepts JSON, XML, or Excel files. You can download a template showing the expected format for each file type. Note that Excel file support is limited to models that do not contain collections as input. |
| Test case | A selector that lets you load a previously saved test case into the HTML mode to edit and/or submit. |
| Test Data (requires Automation) | A web form that lets you persist each input as test data to be reused across models. Test data is shared across all users and keyed by the data type of the input. Therefore, two models using a data type tPerson can be started using the same test data. This form also lets you manage the full life cycle of test data. See the Form section below for more information on the different widgets used to define the test data. |
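To illustrate what "keyed by the data type" means for Test Data, the following Python sketch models a shared store in memory. It is an assumption-laden illustration only: `SharedTestData`, `offered`, and the sample values are hypothetical and do not reflect how Decision Modeler stores test data.

```python
from typing import Any, Dict


class SharedTestData:
    """Hypothetical in-memory store: data type name -> saved test values."""

    def __init__(self) -> None:
        self._by_type: Dict[str, Dict[str, Any]] = {}

    def save(self, data_type: str, name: str, value: Any) -> None:
        self._by_type.setdefault(data_type, {})[name] = value

    def candidates(self, data_type: str) -> Dict[str, Any]:
        """Saved values usable for any input declared with this data type."""
        return dict(self._by_type.get(data_type, {}))


store = SharedTestData()
store.save("tPerson", "Adult applicant", {"name": "Ann", "age": 42})

# Two unrelated models whose inputs happen to share the data type tPerson.
model_a_inputs = {"Applicant": "tPerson"}
model_b_inputs = {"Customer": "tPerson", "Amount": "Number"}


def offered(inputs: Dict[str, str]) -> Dict[str, Dict[str, Any]]:
    """For each input, list the saved test data matching its data type."""
    return {name: store.candidates(dtype) for name, dtype in inputs.items()}
```

Because lookup depends only on the data type, `Applicant` in the first model and `Customer` in the second are offered the same saved value, while `Amount` has none.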

Form

When HTML input is selected, the test interface presents a Form to input data.

Each input value has an associated data entry widget specific to the datatype defined for the input in the model. Note that if no data is entered in a field, the value is considered null except for Text inputs where it is considered an empty string. A null value can also be entered for a Text input by pressing the delete or backspace key.
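The null and empty-string rule above can be summarized in a few lines of Python. This is only a sketch of the described behavior; `form_value` and its parameters are hypothetical names, and the `explicitly_nulled` flag stands in for pressing delete or backspace on a Text input.

```python
from typing import Optional


def form_value(raw: Optional[str], data_type: str,
               explicitly_nulled: bool = False) -> Optional[str]:
    """Return the value a form field contributes to the service input."""
    if explicitly_nulled:
        # The user nulled the field on purpose, whatever its type.
        return None
    if raw is None or raw == "":
        # An untouched Text field is an empty string; any other type is null.
        return "" if data_type == "Text" else None
    return raw
```

For example, `form_value("", "Text")` yields an empty string, while `form_value("", "Number")` and `form_value("", "Text", explicitly_nulled=True)` both yield `None`.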

Structures and collections also have a caret to the left of their name that expands or collapses them. They also have a null icon that is either grayed out (the value is not null) or dark (the value is null). Clicking the null icon nulls out the value of the structure or collection.

Collection inputs also offer an Excel button to import the data from a Microsoft Excel file. Please note that it may not be possible to represent complex data structures in an Excel file.

Running a Test

Once the service input values have been entered, you can run the test either by clicking the Submit button in the left-side panel or the Play button in the test bar at the top of the main panel. The service will run either to completion or a breakpoint. When the service completes or pauses, matching rules in decision tables are highlighted.

- The square button stops the currently running service and allows new input values to be entered.

- The play-pause button continues the service paused at a breakpoint.

- The x button closes the test mode.

When the service is completed or paused, you can examine its data in one of three panels: Out, Trace, or Data.

Out

When the service completes, the output results are presented in the Out tab. From there, you can save the results as a Test Case or download the results as a JSON file.

Trace

The Trace view displays the executed service nodes. You can click a node to see the details of its inputs and outputs. These are presented both in a user-readable format and in raw form, as the ServiceNodeStarted/ServiceNodeFinished events that were emitted.

The Trace view presents the history of everything that already occurred in the service.

Breakpoints

When you hover over a supported node on the diagram, breakpoint overlays become visible. Clicking these icons sets or clears breakpoints. The following breakpoints can be set:

- Before a decision: Stop before executing the decision logic of a decision

- After a decision: Stop after executing the decision logic of a decision

- Before an invokable (BKM, decision service): Stop before executing the decision logic of the invokable

- After an invokable (BKM, decision service): Stop after executing the decision logic of the invokable

Breakpoints can be added/removed while the test session is active.
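The before/after breakpoint behavior can be pictured with a small Python generator. This is a conceptual sketch under simplifying assumptions, not the product's execution engine: `run`, the node list, and the `("before" | "after", node name)` breakpoint tuples are all hypothetical, and nodes are assumed to be listed in execution order.

```python
from typing import Any, Callable, Dict, Iterator, List, Set, Tuple

Node = Tuple[str, Callable[[Dict[str, Any]], Any]]
Event = Tuple[str, str, Dict[str, Any]]


def run(nodes: List[Node], inputs: Dict[str, Any],
        breakpoints: Set[Tuple[str, str]]) -> Iterator[Event]:
    """Evaluate nodes in order, pausing (yielding) at matching breakpoints."""
    context = dict(inputs)
    for name, evaluate in nodes:
        if ("before", name) in breakpoints:
            yield ("before", name, dict(context))
        context[name] = evaluate(context)
        if ("after", name) in breakpoints:
            yield ("after", name, dict(context))
    yield ("completed", "", dict(context))


nodes: List[Node] = [
    ("Risk", lambda c: "HIGH" if c["Age"] < 25 else "LOW"),
    ("Approval", lambda c: c["Risk"] == "LOW"),
]
breakpoints = {("before", "Approval")}

session = run(nodes, {"Age": 30}, breakpoints)
first = next(session)                    # pauses before Approval
breakpoints.add(("after", "Approval"))   # added while the session is active
second = next(session)                   # pauses after Approval
third = next(session)                    # runs to completion
```

The breakpoint set is consulted at each step rather than copied at start, which is why a breakpoint added while the session is paused still takes effect.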

In modeling mode, you can also display the breakpoint overlays using the Overlay right-side panel.

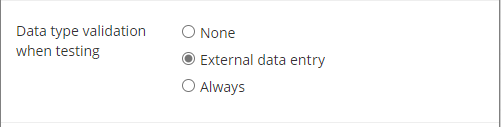

Data type validation

By default, tests run with Data type validation set to "None". You can change this setting in Preferences on the File menu.

Advanced

When testing complex models that use a lot of business logic or included models, deployment and execution can take longer.

The Preferences dialog in the File menu offers advanced configurations for the testing of models.

Synchronize all dependent models when deploying and testing

This option is turned on by default. Before testing or deploying a model, model dependencies are loaded and synchronized with changes coming from the Digital Enterprise Graph. This process could require a significant amount of network processing and if the number of dependencies grows, it could slow down testing and deployment.

Disabling this option causes the automation suite to use the already saved version of the dependencies. It also lets the automation cache the compiled version of those models, which further reduces model compilation time on subsequent operations.

| Do not disable this option when deploying models with dependencies that use operations requiring identities. |

Run the Test in "Output results only"

Turning on this option speeds up test execution by reducing the amount of debugging information generated and transmitted over the network to the modeler. Models that generate a large amount of intermediate data benefit the most from this feature.

| When this option is enabled, only the end result of the test is collected. The execution will not stop at breakpoints and the result will not be overlaid on the diagram. |