Digital Distributed Container Hosting

| A Digital Distributed Container subscription is required. |

Requirements

Digital Distributed Containers are built by the Digital Enterprise Suite by extending the engine base image with deployed services. You need to obtain a Digital Distributed Container image built by the Digital Enterprise Suite (either through automatic build on the environment or through the service library).

Digital Distributed Containers can be deployed on Kubernetes (including commercial offerings such as Google GKE, Azure AKS, Amazon EKS, Red Hat OpenShift, …) or on other services specifically designed to run containers.

The memory and CPU resources allocated to the container can be adjusted in the deployment descriptors at installation time, but we recommend starting with the default values:

- Memory: 500MB to 1GB
- CPU: 1
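
As an illustration of how these defaults might appear in a deployment descriptor, the following minimal sketch builds a Kubernetes-style `resources` block in Python and prints it as JSON. The mapping of "500MB to 1GB" to a 500M request and a 1G limit is an assumption, as are the container name and image reference, which are placeholders rather than values from the product.

```python
import json


def default_resources() -> dict:
    """Return a Kubernetes-style `resources` block with the suggested defaults.

    Assumption: the lower bound of the memory range is used as the request
    and the upper bound as the limit; the CPU default of 1 is used for both.
    """
    return {
        "requests": {"memory": "500M", "cpu": "1"},
        "limits": {"memory": "1G", "cpu": "1"},
    }


def container_spec(name: str, image: str) -> dict:
    """Build a minimal container entry; `name` and `image` are placeholders."""
    return {"name": name, "image": image, "resources": default_resources()}


if __name__ == "__main__":
    # Hypothetical container name and image reference, for illustration only.
    spec = container_spec("ddc", "registry.example.com/ddc:latest")
    print(json.dumps(spec, indent=2))
```

The printed fragment can be compared against the `resources` section of the deployment descriptor you actually install with; the real descriptor remains the authority on field names and values.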

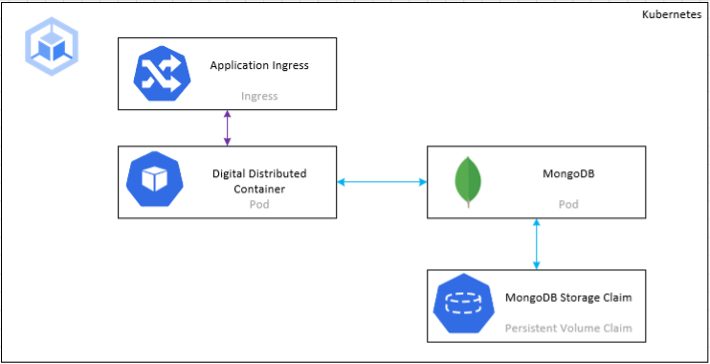

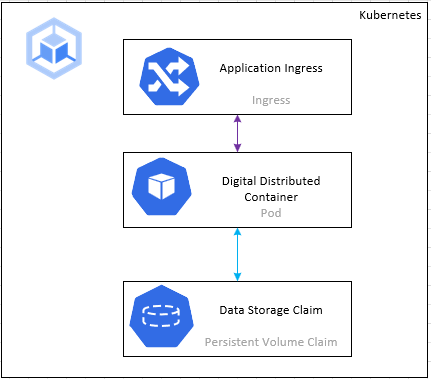

Architecture

The Digital Distributed Container (DDC) is designed to be deployed as a container on a container orchestrator such as Kubernetes.

A web interface is exposed through the ingress for all communications with the Digital Enterprise Suite. A second port can be accessed internally to gather usage metrics (monitoring).

In a typical deployment, the ingress secures the web port by exposing it over HTTPS, and the usage metrics port is not exposed outside of the cluster. An API Gateway can also be used as the ingress if only the API portion should be exposed.
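
The exposure pattern described above can be sketched as a pair of Kubernetes manifests: a cluster-internal Service that carries both ports, and an Ingress that terminates TLS and routes only to the web port. This is a sketch under stated assumptions, not the descriptor shipped with the product: the port numbers, resource names, host, and TLS secret name are all hypothetical.

```python
import json

# Hypothetical port numbers; check your image's documentation for real values.
WEB_PORT = 8080
METRICS_PORT = 8081


def service(name: str) -> dict:
    """ClusterIP Service: both ports are reachable only inside the cluster."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": name},
            "ports": [
                {"name": "web", "port": WEB_PORT, "targetPort": WEB_PORT},
                {"name": "metrics", "port": METRICS_PORT, "targetPort": METRICS_PORT},
            ],
        },
    }


def ingress(name: str, host: str, tls_secret: str) -> dict:
    """Ingress that serves HTTPS for `host` and forwards only to the web port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                # The metrics port is deliberately not referenced.
                                "backend": {
                                    "service": {"name": name, "port": {"name": "web"}}
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(service("ddc"), indent=2))
    print(json.dumps(ingress("ddc", "ddc.example.com", "ddc-tls"), indent=2))
```

Because the Ingress references only the `web` port by name, the metrics port stays reachable to in-cluster monitoring tools while remaining invisible from outside.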

Storage Considerations

There are three deployment models for DDC:

- Stateless
- Stateful using persistent storage
- Stateful using MongoDB

When running stateless services, with Decision and Workflow Models that don’t require user interaction or events, persistent storage isn’t necessary. The persistent volume can be removed, which makes the container easier to scale.

When running stateful services, storage is required and is available in two flavors: persistent storage or MongoDB.

Persistent storage allows for a simple deployment without having to configure an external database container (MongoDB). However, it limits the scaling of the container because, on most container execution infrastructure, persistent storage can’t be attached in read/write mode to multiple pods and/or multiple nodes. If scaling beyond one pod/node is required, consider using MongoDB storage.

MongoDB storage offers the most flexibility at runtime since it can be replicated natively across data centers and redundant infrastructure.
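
The trade-offs above can be summarized as a small decision helper. The function below is a hypothetical sketch that encodes only the guidance in this section: it is not part of the product, and its names (`StorageModel`, `choose_storage_model`, `needs_state`, `replicas`) are assumptions introduced for illustration. It returns the simplest model that satisfies the stated constraints.

```python
from enum import Enum


class StorageModel(Enum):
    STATELESS = "stateless"
    PERSISTENT_STORAGE = "stateful using persistent storage"
    MONGODB = "stateful using MongoDB"


def choose_storage_model(needs_state: bool, replicas: int) -> StorageModel:
    """Pick the simplest deployment model for the given requirements.

    needs_state: True if the deployed models require user interaction or events.
    replicas:    number of pods the service is expected to scale to.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    if not needs_state:
        # No persistent volume is needed, so scaling is unconstrained.
        return StorageModel.STATELESS
    if replicas > 1:
        # Persistent volumes usually cannot be mounted read/write by several pods.
        return StorageModel.MONGODB
    return StorageModel.PERSISTENT_STORAGE


if __name__ == "__main__":
    for needs_state, replicas in [(False, 5), (True, 1), (True, 3)]:
        model = choose_storage_model(needs_state, replicas)
        print(f"needs_state={needs_state}, replicas={replicas}: {model.value}")
```

A single-replica stateful deployment can still use MongoDB, for example when growth or cross-data-center replication is anticipated; the helper only reflects the minimum that the constraints in this section require.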